1. Cost Function

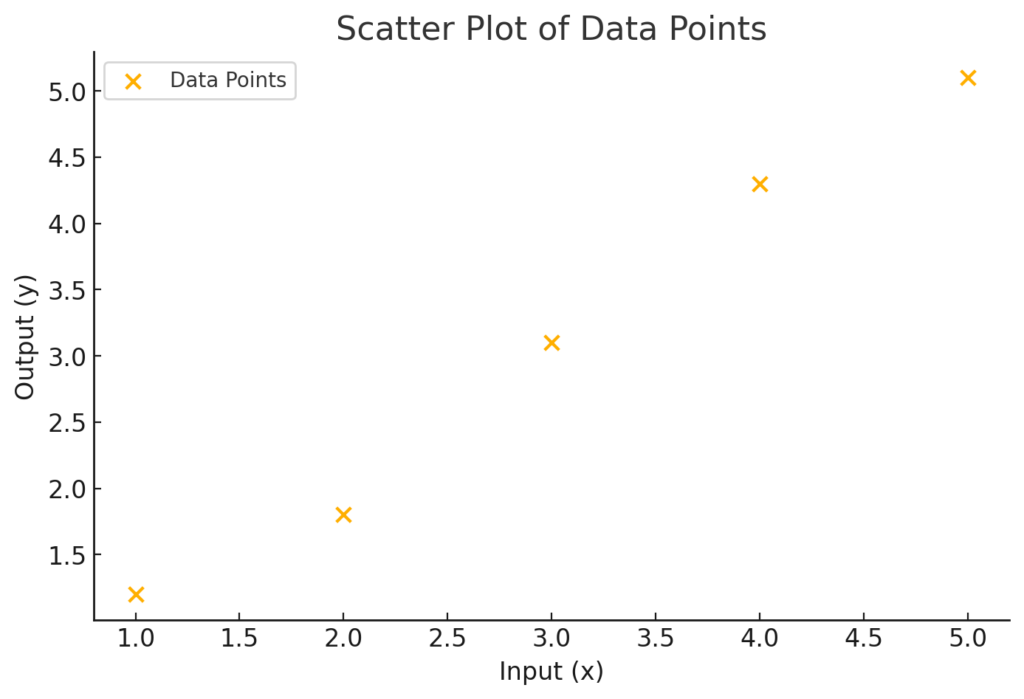

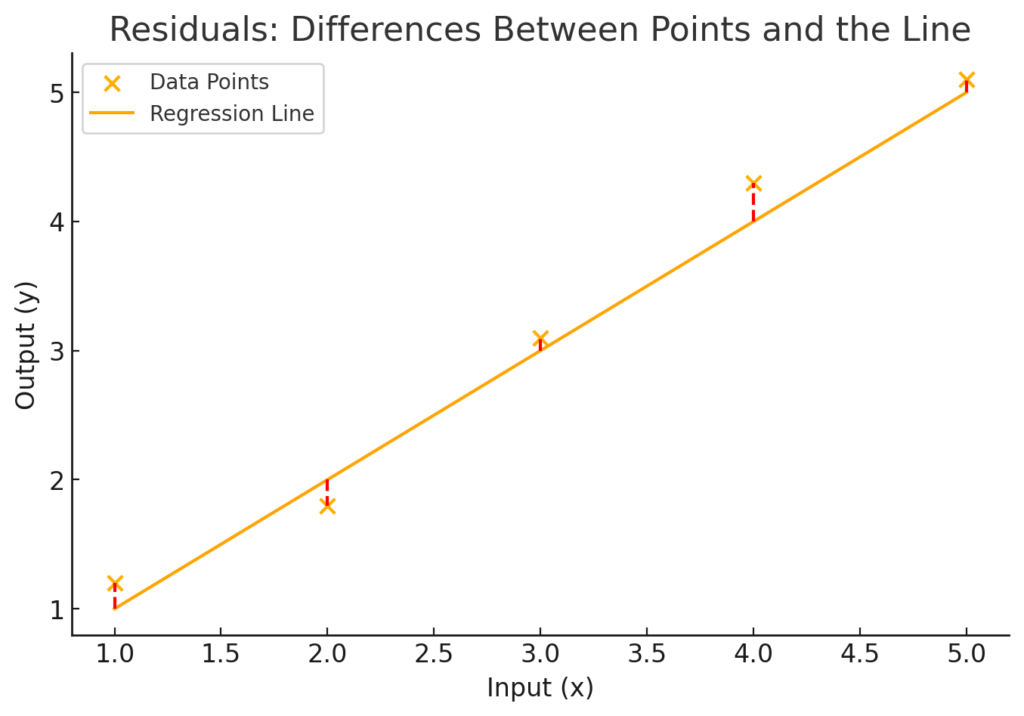

The cost function to minimize is the sum of squared errors between the observed (\(y_i\)) and predicted (\(\hat{y}_i\)) values:

It is also written as

Substitute the predicted value \( \hat{y}_i = mx_i + b \):

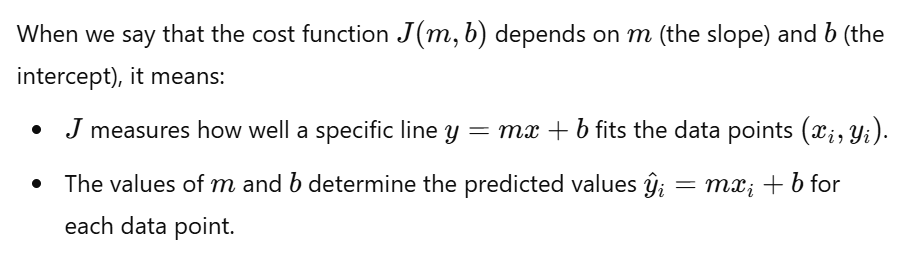
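
Written out in full, the cost described above takes the following form. The symbol \(J(m, b)\) for the cost and \(n\) for the number of data points are naming assumptions made here for convenience.

\[
J(m, b) = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)^2
\]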

You can visualize the cost as a surface in 3D, plotted against the slope \(m\) and the intercept \(b\).
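
To make the cost concrete, here is a minimal sketch that evaluates the sum of squared errors for a given slope and intercept. The function name `sse_cost` and the sample data are illustrative assumptions, not taken from the original figures.

```python
def sse_cost(xs, ys, m, b):
    """Sum of squared errors between observed ys and the line m*x + b."""
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

# The cost is zero on the true line and grows as (m, b) moves away from it.
cost_at_true_line = sse_cost(xs, ys, m=2.0, b=1.0)
cost_off_line = sse_cost(xs, ys, m=1.0, b=1.0)
```

Evaluating `sse_cost` over a grid of `m` and `b` values gives the heights of the bowl-shaped surface mentioned above.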

2. Partial Derivative with Respect to \(b\)

Apply the chain rule. Expand the squared term (only terms involving \(b\) matter):

Apply the derivative across the summation:

Set the derivative to 0 to minimize the cost:

Solve for \(b\):

Expand the right-hand side:

Note
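
The steps of this section can be written as one chain. This is a reconstruction from the definitions above, assuming the cost is named \(J\) and all sums run over \(i = 1, \dots, n\).

\[
\begin{aligned}
\frac{\partial J}{\partial b} &= \sum_{i=1}^{n} 2\,(y_i - m x_i - b)(-1) = -2 \sum_{i=1}^{n} (y_i - m x_i - b) \\
0 &= \sum_{i=1}^{n} y_i - m \sum_{i=1}^{n} x_i - n b \\
b &= \frac{\sum_{i=1}^{n} y_i - m \sum_{i=1}^{n} x_i}{n} = \frac{\sum_{i=1}^{n} y_i}{n} - m\,\frac{\sum_{i=1}^{n} x_i}{n} = \bar{y} - m\bar{x}
\end{aligned}
\]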

3. Partial Derivative with Respect to \(m\)

Now, take the derivative of the cost function with respect to \(m\):

Apply the chain rule. Expand the squared term (only terms involving \(m\) matter):

Apply the derivative across the summation:

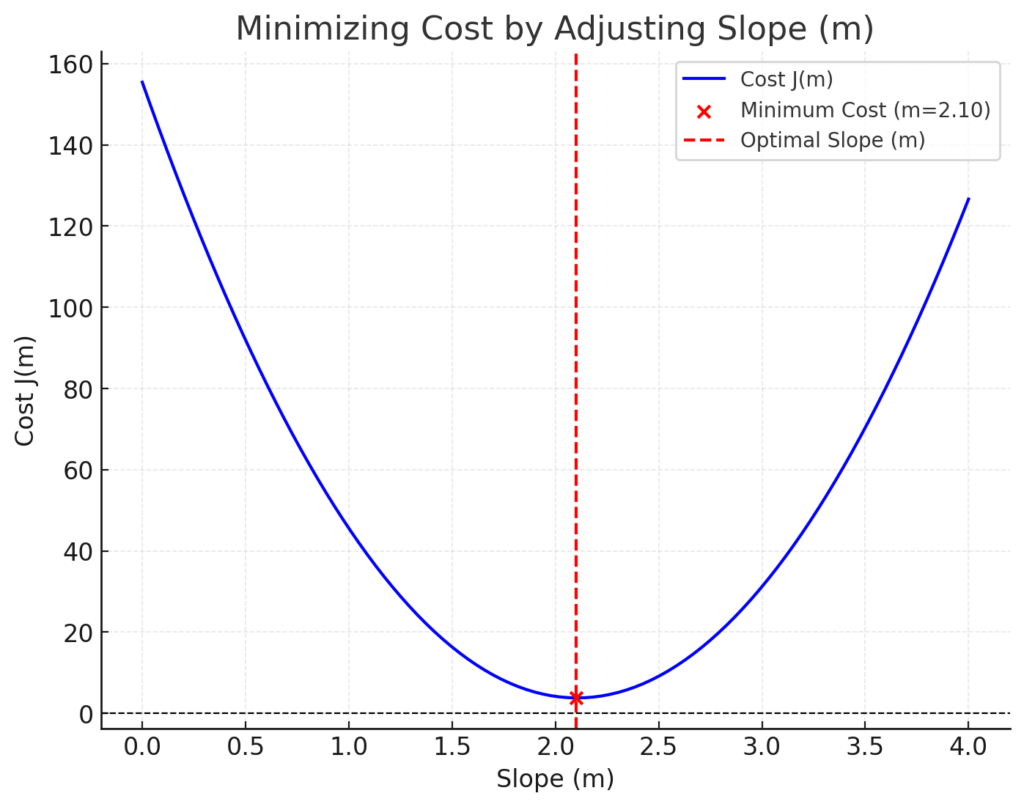

Set the derivative to 0 to minimize the cost:

Solve for \(m\):

Rearrange the terms:

Note
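
The same steps for \(m\) can be written as one chain. This is a reconstruction under the same assumptions as before (cost named \(J\), sums over \(i = 1, \dots, n\)).

\[
\begin{aligned}
\frac{\partial J}{\partial m} &= \sum_{i=1}^{n} 2\,(y_i - m x_i - b)(-x_i) = -2 \sum_{i=1}^{n} x_i\,(y_i - m x_i - b) \\
0 &= \sum_{i=1}^{n} x_i y_i - m \sum_{i=1}^{n} x_i^2 - b \sum_{i=1}^{n} x_i \\
m &= \frac{\sum_{i=1}^{n} x_i y_i - b \sum_{i=1}^{n} x_i}{\sum_{i=1}^{n} x_i^2}
\end{aligned}
\]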

Let’s look at both \(b\) and \(m\)

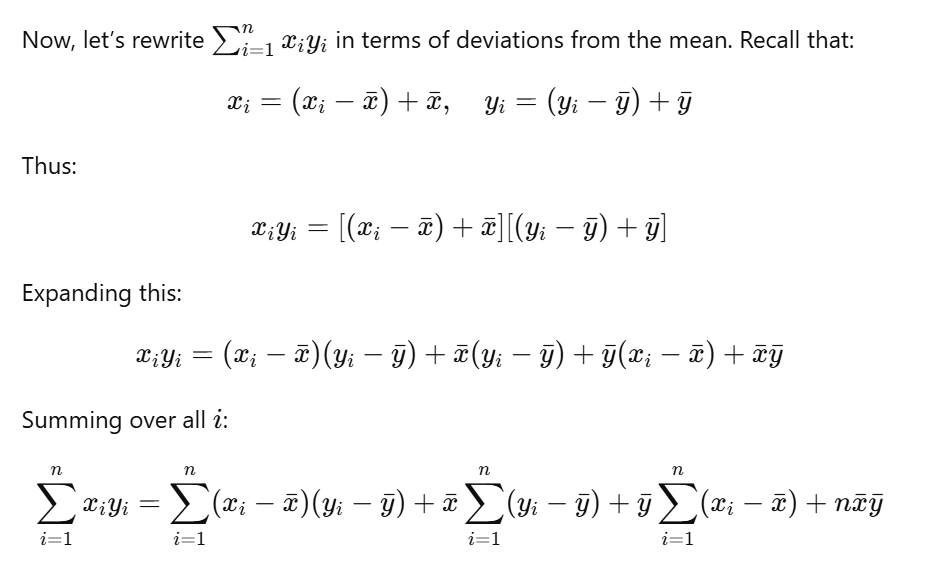

Rearrange numerator of \(m\)

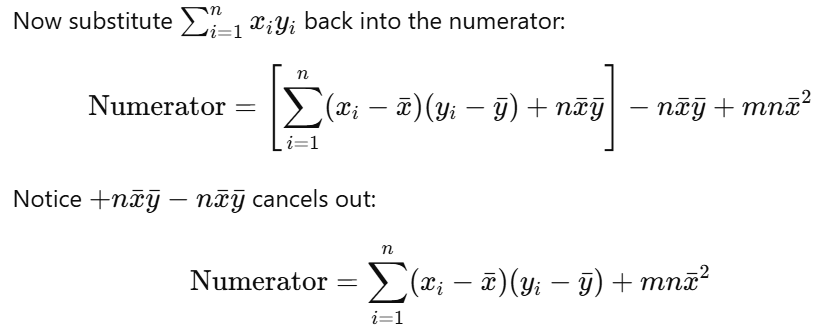

Substituting into numerator

Expand the numerator

Simplified numerator

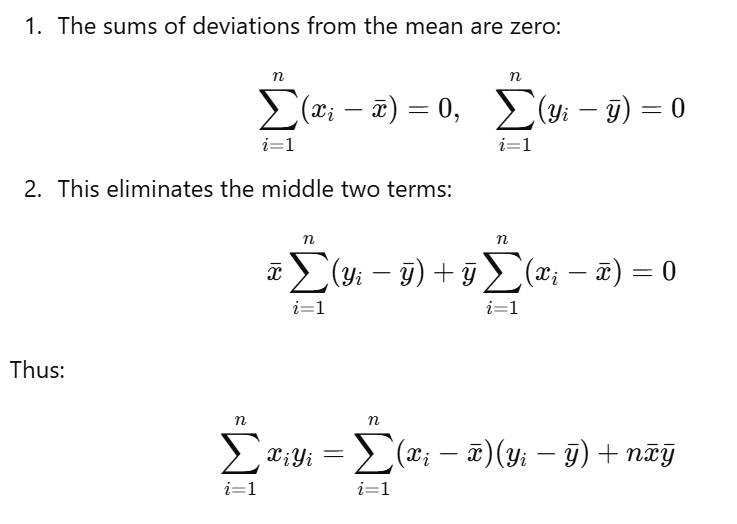
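
As a sketch of the numerator algebra, assuming the numerator of \(m\) is \( \sum x_i y_i - b \sum x_i \) and using \( b = \bar{y} - m\bar{x} \) together with \( \sum x_i = n\bar{x} \):

\[
\begin{aligned}
\sum_{i=1}^{n} x_i y_i - b \sum_{i=1}^{n} x_i &= \sum_{i=1}^{n} x_i y_i - (\bar{y} - m\bar{x})\, n\bar{x} \\
&= \sum_{i=1}^{n} x_i y_i - n\bar{x}\bar{y} + mn\bar{x}^2 \\
&= \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) + mn\bar{x}^2
\end{aligned}
\]

The last step uses \( \sum (x_i - \bar{x})(y_i - \bar{y}) = \sum x_i y_i - \bar{x}\sum y_i - \bar{y}\sum x_i + n\bar{x}\bar{y} = \sum x_i y_i - n\bar{x}\bar{y} \).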

Key Simplifications

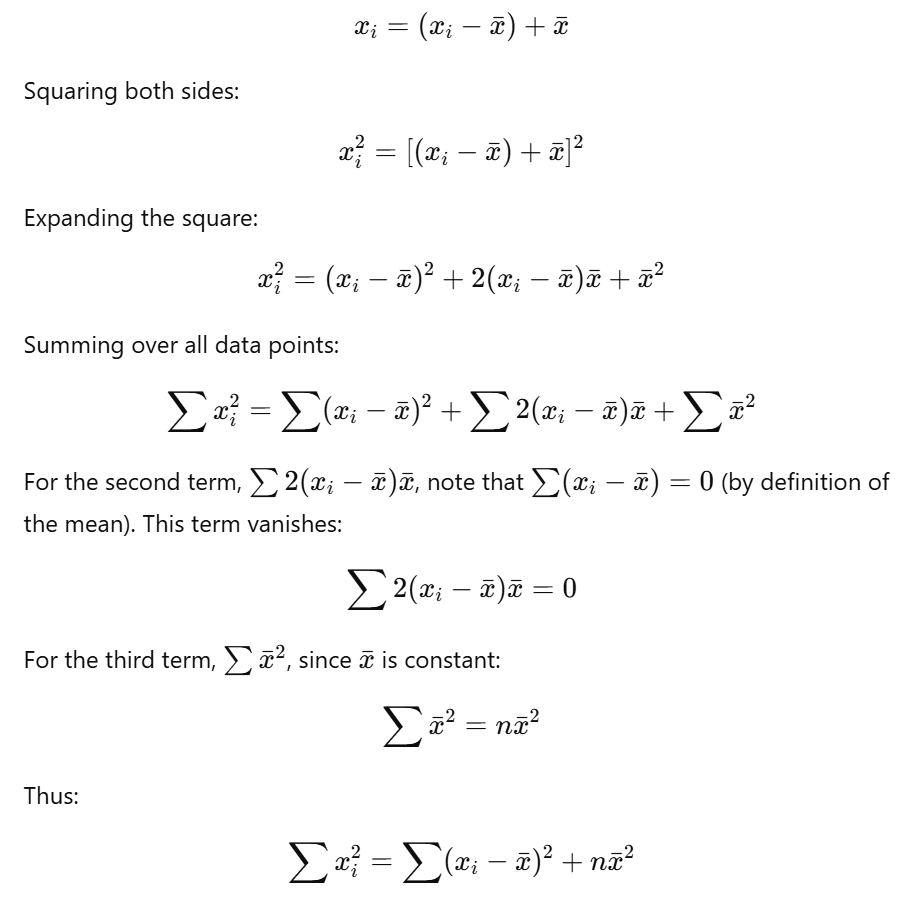

Rearrange denominator of \(m\)
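
A sketch of the denominator algebra, assuming the denominator of \(m\) is \( \sum x_i^2 \). Expanding the squared deviations and using \( \sum x_i = n\bar{x} \):

\[
\begin{aligned}
\sum_{i=1}^{n} (x_i - \bar{x})^2 &= \sum_{i=1}^{n} x_i^2 - 2\bar{x} \sum_{i=1}^{n} x_i + n\bar{x}^2 = \sum_{i=1}^{n} x_i^2 - n\bar{x}^2 \\
\sum_{i=1}^{n} x_i^2 &= \sum_{i=1}^{n} (x_i - \bar{x})^2 + n\bar{x}^2
\end{aligned}
\]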

Replace with the rearranged numerator and denominator:

\( m = \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y}) + mn \bar{x}^2}{\sum_{i=1}^n (x_i - \bar{x})^2 + n \bar{x}^2} \)

How \( mn\bar{x}^2 \) and \( n\bar{x}^2 \) balance each other

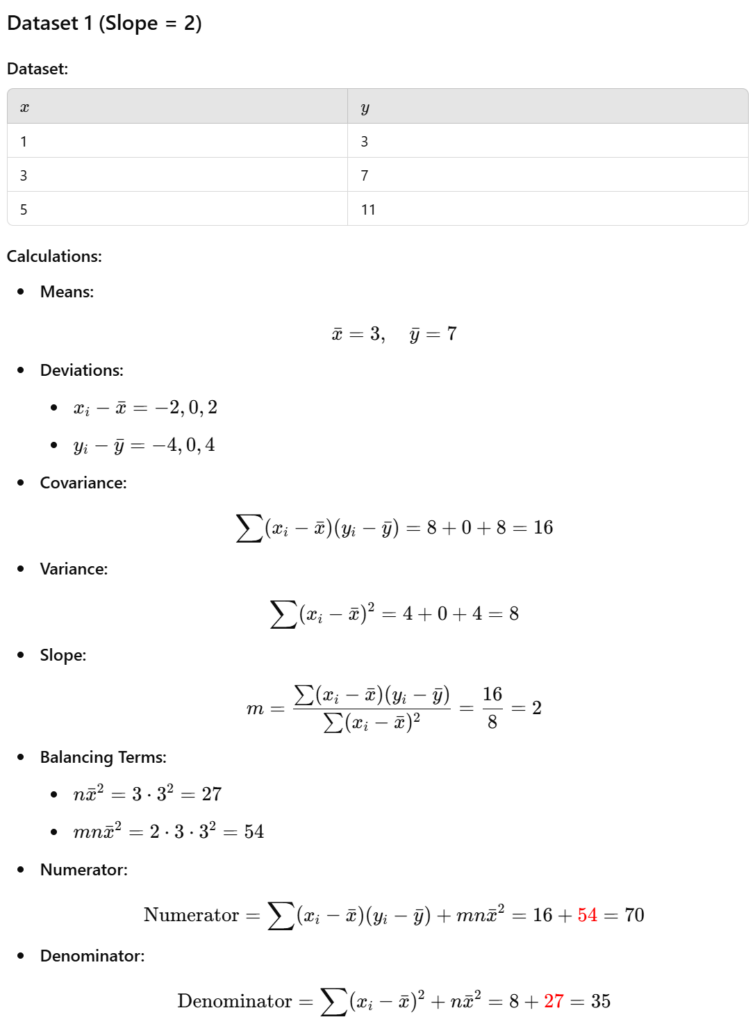

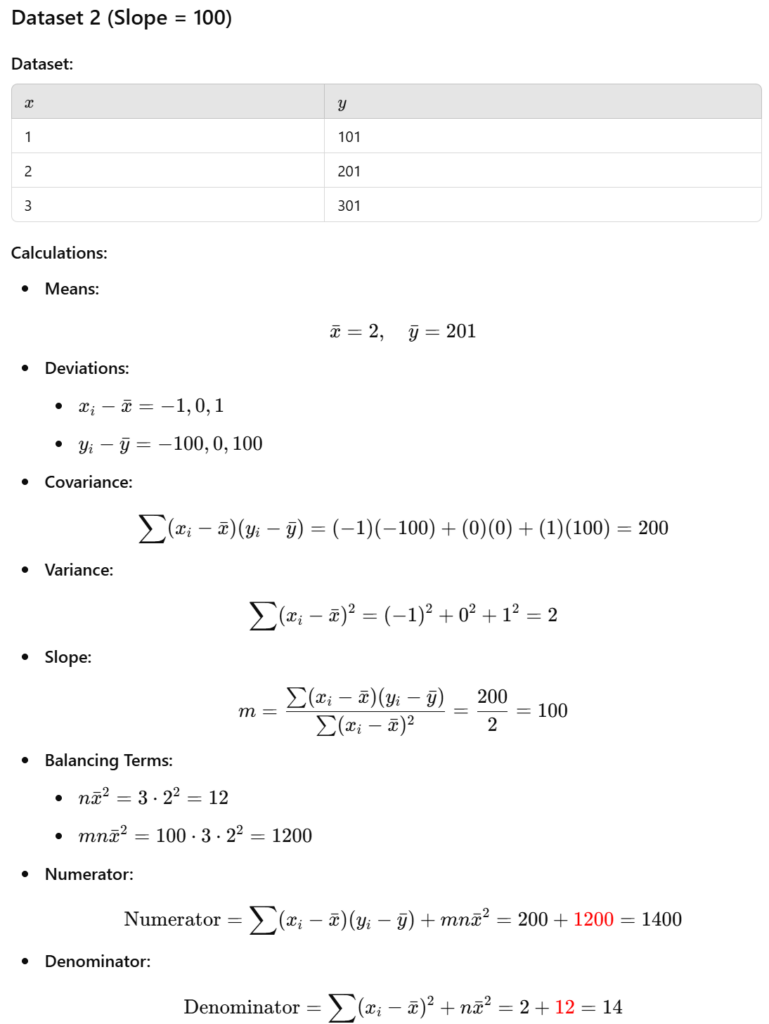

Let’s take two examples, one with slope 2 and one with slope 100. In both cases the terms \( mn\bar{x}^2 \) and \( n\bar{x}^2 \) (shown in red) do not affect the value of the slope.
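
The same check can be run numerically. The sketch below computes the slope from the deviation sums alone and again with the extra \( mn\bar{x}^2 \) and \( n\bar{x}^2 \) terms included. The data sets (lines with slope 2 and slope 100 and an intercept of 5) and the name `slope_both_ways` are hypothetical and are not the values shown in the images.

```python
def slope_both_ways(xs, ys):
    """Return (short_form, long_form) slopes for the data.

    short_form drops the n*x_bar^2 terms; long_form keeps them.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    m_short = s_xy / s_xx
    # Long form: the slope appears on the right-hand side as well.
    m_long = (s_xy + m_short * n * x_bar ** 2) / (s_xx + n * x_bar ** 2)
    return m_short, m_long


xs = [1.0, 2.0, 3.0, 4.0]
results = {}
for true_slope in (2.0, 100.0):
    ys = [true_slope * x + 5.0 for x in xs]  # hypothetical noiseless data
    results[true_slope] = slope_both_ways(xs, ys)
```

For both data sets the two forms agree, which is what "balancing each other" means in practice.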

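The terms \( mn\bar{x}^2 \) and \( n\bar{x}^2 \) balance each other: multiplying both sides by the denominator puts \( mn\bar{x}^2 \) on each side of the equation, where it cancels. We can therefore drop them from the slope expression, which makes it more intuitive and focuses it on the relationship between the deviations of \(x\) and \(y\) from their respective means.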
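
The cancellation can be shown in a few lines. The shorthand \( A = \sum (x_i - \bar{x})(y_i - \bar{y}) \) and \( B = \sum (x_i - \bar{x})^2 \) is introduced here only for brevity, and \( B \neq 0 \) is assumed (the \(x\) values are not all identical).

\[
\begin{aligned}
m &= \frac{A + mn\bar{x}^2}{B + n\bar{x}^2} \\
m\,(B + n\bar{x}^2) &= A + mn\bar{x}^2 \\
mB + mn\bar{x}^2 &= A + mn\bar{x}^2 \\
mB &= A \\
m &= \frac{A}{B}
\end{aligned}
\]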

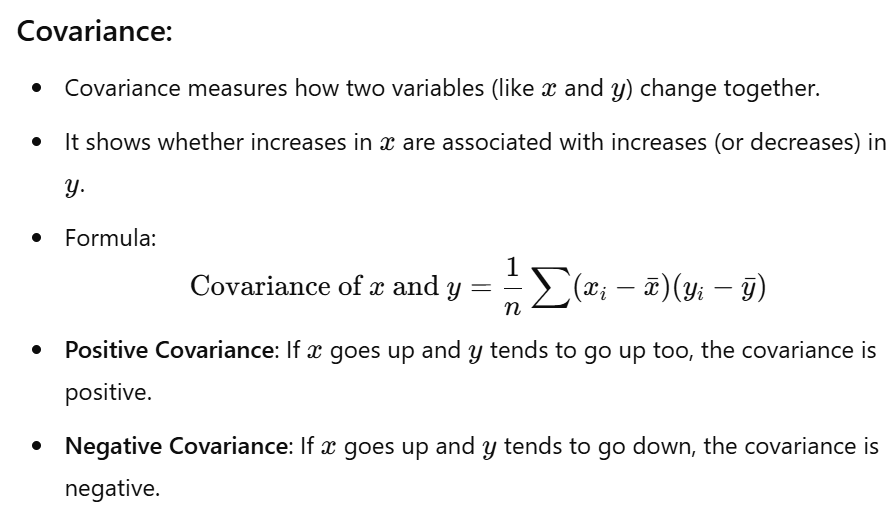

\( m = \frac{\text{Cov}(x, y)}{\text{Var}(x)} = \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^n (x_i - \bar{x})^2} \)
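
As a minimal sketch, the closed-form slope above and the intercept \( b = \bar{y} - m\bar{x} \) translate directly into code. The function name `fit_line` and the sample data are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept from the closed-form expressions."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sums of cross-deviations and squared deviations from the means.
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    m = s_xy / s_xx
    b = y_bar - m * x_bar
    return m, b


# Hypothetical data: x_bar = 3, y_bar = 4, s_xy = 6, s_xx = 10.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
m, b = fit_line(xs, ys)  # m is approximately 0.6, b approximately 2.2
```

The guard on `s_xx` covers the one case where the formula breaks down: if every \(x_i\) equals \( \bar{x} \), the denominator is zero.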

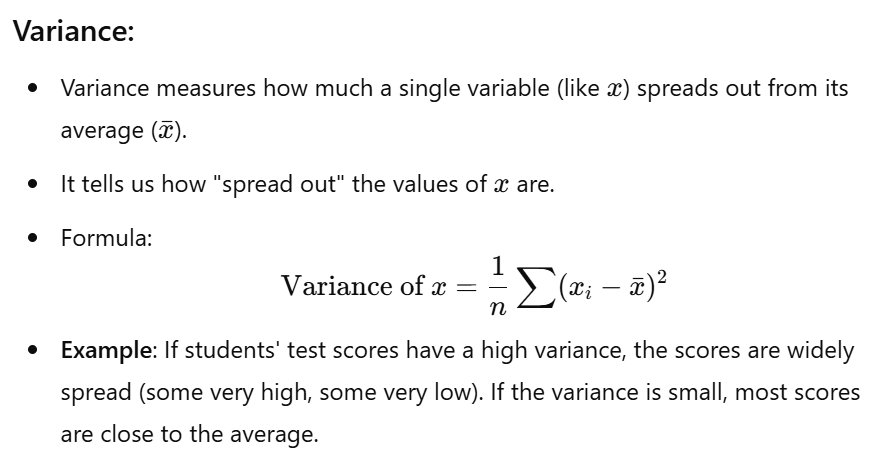

Fundamentals used in above derivation

Mean of x

Mean of y

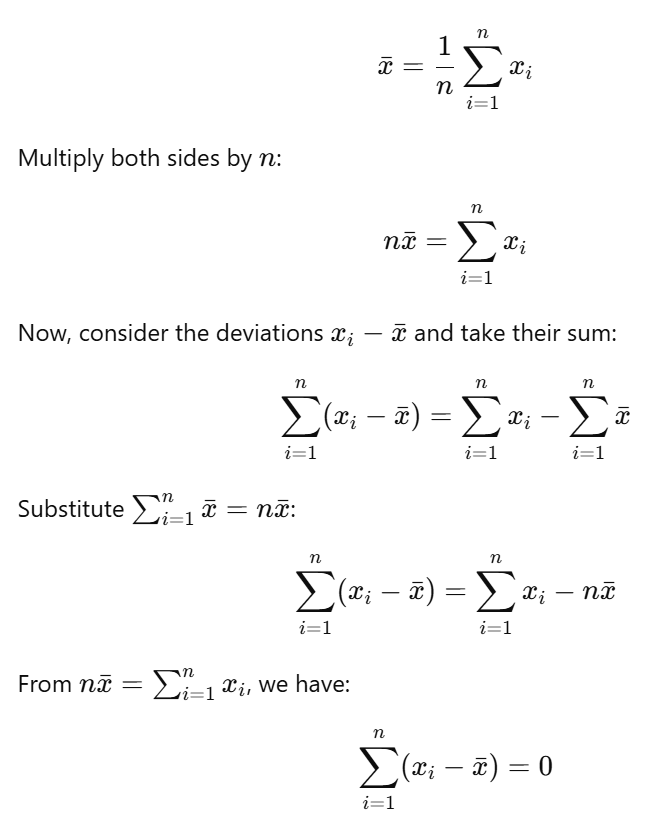

Sum of deviation from mean is zero
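
This last identity follows directly from the definition of the mean, \( \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i \), and the same argument holds for \(y\):

\[
\sum_{i=1}^{n} (x_i - \bar{x}) = \sum_{i=1}^{n} x_i - n\bar{x} = n\bar{x} - n\bar{x} = 0
\]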
